music21.search.base¶

base classes for searching scores.

See User’s Guide, Chapter 43: Searching in and Among Scores for details.

SearchMatch¶

- class music21.search.base.SearchMatch(elStart, els, index, iterator)¶

A lightweight object representing the match (if any) for a search. Derived from namedtuple

SearchMatch bases

SearchMatch instance variables

- SearchMatch.elStart¶

The first element that matches the list.

- SearchMatch.els¶

A tuple of all the matching elements.

- SearchMatch.index¶

The index in the iterator at which the first element can be found

- SearchMatch.iterator¶

The iterator which produced these elements.

StreamSearcher¶

- class music21.search.base.StreamSearcher(streamSearch: Stream, searchList: list[Music21Object])¶

An object that can search through streams for a set of elements or notes or something of that sort.

Create a basic Stream first:

>>> thisStream = converter.parse('tinynotation: 3/4 c4. d8 e4 g4. a8 f4. c4. d4') >>> thisStream.show('text') {0.0} <music21.stream.Measure 1 offset=0.0> {0.0} <music21.clef.TrebleClef> {0.0} <music21.meter.TimeSignature 3/4> {0.0} <music21.note.Note C> {1.5} <music21.note.Note D> {2.0} <music21.note.Note E> {3.0} <music21.stream.Measure 2 offset=3.0> {0.0} <music21.note.Note G> {1.5} <music21.note.Note A> {2.0} <music21.note.Note F> {6.0} <music21.stream.Measure 3 offset=6.0> {0.5} <music21.note.Note C> {2.0} <music21.note.Note D> {3.0} <music21.bar.Barline type=final>

Let’s create something to search for:

>>> c = note.Note('C', quarterLength=1.5) >>> d = note.Note('D', quarterLength=0.5) >>> searchList = [c, d]

Now create a StreamSearcher:

>>> ss = search.StreamSearcher(thisStream, searchList)

searchList could also be a Stream in itself.

Let’s configure it for recursive search and to filter so only notes are there:

>>> ss.recurse = True >>> ss.filterNotes = True # or `.filterNotesAndRests`

Alternatively, we could have passed in a StreamIterator instead of thisStream.

Now let’s configure the algorithms:

>>> ss.algorithms [<function StreamSearcher.wildcardAlgorithm at 0x111b6e340>]

Wildcard search is a default algorithm that lets you use wildcards. I suggest you leave it in place and add to the algorithms list. We can add the rhythmAlgorithm to it:

>>> ss.algorithms.append(search.StreamSearcher.rhythmAlgorithm) >>> ss.algorithms [<function StreamSearcher.wildcardAlgorithm at 0x111b6e340>, <function StreamSearcher.rhythmAlgorithm at 0x11200000>]

Now run it:

>>> results = ss.run() >>> results [SearchMatch(elStart=<music21.note.Note C>, els=len(2), index=0, iterator=[...]), SearchMatch(elStart=<music21.note.Note G>, els=len(2), index=3, iterator=[...])]

>>> results[0].elStart.measureNumber 1 >>> results[1].elStart.measureNumber 2

Wildcards can be useful:

>>> searchStream2 = stream.Stream() >>> searchStream2.append(note.Note(quarterLength=0.5)) >>> searchStream2.append(search.Wildcard()) >>> searchStream2.append(note.Note(quarterLength=1.5))

>>> ss.searchList = searchStream2 >>> results = ss.run() >>> results [SearchMatch(elStart=<music21.note.Note D>, els=len(3), index=1, iterator=[...]), SearchMatch(elStart=<music21.note.Note A>, els=len(3), index=4, iterator=[...])] >>> results[0].els (<music21.note.Note D>, <music21.note.Note E>, <music21.note.Note G>) >>> [n.duration.quarterLength for n in results[0].els] [0.5, 1.0, 1.5]

StreamSearcher methods

- StreamSearcher.noteNameAlgorithm(streamEl: Music21Object, searchEl: Music21Object)¶

- StreamSearcher.rhythmAlgorithm(streamEl: Music21Object, searchEl: Music21Object)¶

- StreamSearcher.run() list[SearchMatch]¶

- StreamSearcher.wildcardAlgorithm(streamEl: Music21Object, searchEl: Music21Object)¶

An algorithm that supports Wildcards – added by default to the search function.

Wildcard¶

- class music21.search.base.Wildcard(**keywords)¶

An object that may have some properties defined, but others not that matches a single object in a music21 stream. Equivalent to the regular expression “.”

>>> wc1 = search.Wildcard() >>> wc1.pitch = pitch.Pitch('C') >>> st1 = stream.Stream() >>> st1.append(note.Note('D', type='half')) >>> st1.append(wc1)

Wildcard bases

Wildcard read-only properties

Read-only properties inherited from Music21Object:

Read-only properties inherited from ProtoM21Object:

Wildcard read/write properties

Read/write properties inherited from Music21Object:

Wildcard methods

Methods inherited from Music21Object:

Wildcard instance variables

Instance variables inherited from Music21Object:

WildcardDuration¶

- class music21.search.base.WildcardDuration(typeOrDuration: str | OffsetQLIn | DurationTuple | None = None, /, *, type: str | None = None, dots: int | None = 0, quarterLength: OffsetQLIn | None = None, durationTuple: DurationTuple | None = None, components: Iterable[DurationTuple] | None = None, client: base.Music21Object | None = None, **keywords)¶

a wildcard duration (it might define a duration in itself, but the methods here will see that it is a wildcard of some sort)

No difference from any other duration.

WildcardDuration bases

WildcardDuration read-only properties

Read-only properties inherited from Duration:

Read-only properties inherited from ProtoM21Object:

WildcardDuration read/write properties

Read/write properties inherited from Duration:

WildcardDuration methods

Methods inherited from Duration:

WildcardDuration instance variables

Instance variables inherited from Duration:

Functions¶

- music21.search.base.approximateNoteSearch(thisStream, otherStreams)¶

searches the list of otherStreams and returns an ordered list of matches (each stream will have a new property of matchProbability to show how well it matches)

>>> s = converter.parse("tinynotation: 4/4 c4 d8 e16 FF a'4 b-") >>> o1 = converter.parse("tinynotation: 4/4 c4 d8 e GG a' b-4") >>> o1.id = 'o1' >>> o2 = converter.parse("tinynotation: 4/4 d#2 f A a' G b") >>> o2.id = 'o2' >>> o3 = converter.parse("tinynotation: 4/4 c8 d16 e32 FF32 a'8 b-8") >>> o3.id = 'o3' >>> l = search.approximateNoteSearch(s, [o1, o2, o3]) >>> for i in l: ... print(f'{i.id} {i.matchProbability!r}') o1 0.666666... o3 0.333333... o2 0.083333...

- music21.search.base.approximateNoteSearchNoRhythm(thisStream, otherStreams)¶

searches the list of otherStreams and returns an ordered list of matches (each stream will have a new property of matchProbability to show how well it matches)

>>> s = converter.parse("tinynotation: 4/4 c4 d8 e16 FF a'4 b-") >>> o1 = converter.parse("tinynotation: 4/4 c4 d8 e GG a' b-4") >>> o1.id = 'o1' >>> o2 = converter.parse("tinynotation: 4/4 d#2 f A a' G b") >>> o2.id = 'o2' >>> o3 = converter.parse("tinynotation: 4/4 c4 d e GG CCC r") >>> o3.id = 'o3' >>> l = search.approximateNoteSearchNoRhythm(s, [o1, o2, o3]) >>> for i in l: ... print(f'{i.id} {i.matchProbability!r}') o1 0.83333333... o3 0.5 o2 0.1666666...

- music21.search.base.approximateNoteSearchOnlyRhythm(thisStream, otherStreams)¶

searches the list of otherStreams and returns an ordered list of matches (each stream will have a new property of matchProbability to show how well it matches)

>>> s = converter.parse("tinynotation: 4/4 c4 d8 e16 FF a'4 b-") >>> o1 = converter.parse("tinynotation: 4/4 c4 d8 e GG a' b-4") >>> o1.id = 'o1' >>> o2 = converter.parse("tinynotation: 4/4 d#2 f A a' G b") >>> o2.id = 'o2' >>> o3 = converter.parse("tinynotation: 4/4 c4 d e GG CCC r") >>> o3.id = 'o3' >>> l = search.approximateNoteSearchOnlyRhythm(s, [o1, o2, o3]) >>> for i in l: ... print(f'{i.id} {i.matchProbability!r}') o1 0.5 o3 0.33... o2 0.0

- music21.search.base.approximateNoteSearchWeighted(thisStream, otherStreams)¶

searches the list of otherStreams and returns an ordered list of matches (each stream will have a new property of matchProbability to show how well it matches)

>>> s = converter.parse("tinynotation: 4/4 c4 d8 e16 FF a'4 b-") >>> o1 = converter.parse("tinynotation: 4/4 c4 d8 e GG2 a' b-4") >>> o1.id = 'o1' >>> o2 = converter.parse("tinynotation: 4/4 AAA4 AAA8 AAA16 AAA16 AAA4 AAA4") >>> o2.id = 'o2' >>> o3 = converter.parse("tinynotation: 4/4 c8 d16 e32 FF32 a'8 b-8") >>> o3.id = 'o3' >>> o4 = converter.parse("tinynotation: 4/4 c1 d1 e1 FF1 a'1 b-1") >>> o4.id = 'o4' >>> l = search.approximateNoteSearchWeighted(s, [o1, o2, o3, o4]) >>> for i in l: ... print(f'{i.id} {i.matchProbability!r}') o3 0.83333... o1 0.75 o4 0.75 o2 0.25

- music21.search.base.mostCommonMeasureRhythms(streamIn, transposeDiatonic=False)¶

returns a sorted list of dictionaries of the most common rhythms in a stream where each dictionary contains:

number: the number of times a rhythm appears rhythm: the rhythm found (with the pitches of the first instance of the rhythm transposed to C5) measures: a list of measures containing the rhythm rhythmString: a string representation of the rhythm (see translateStreamToStringOnlyRhythm)

>>> bach = corpus.parse('bwv1.6') >>> sortedRhythms = search.mostCommonMeasureRhythms(bach) >>> for in_dict in sortedRhythms[0:3]: ... number = in_dict['number'] ... rhythmString = in_dict['rhythmString'] ... measures = in_dict['measures'] ... print( ... f'no: {number} ' ... f'rhythmString: {rhythmString}' ... ) ... bars = [ ... (m.number, str(m.getContextByClass(stream.Part).id)) ... for m in measures ... ] ... print(f'bars: {bars!r}') ... in_dict['rhythm'].show('text') ... print('-----') no: 34 rhythmString: PPPP bars: [(1, 'Soprano'), (2, 'Soprano'), (3, 'Soprano'), ..., (1, 'Alto'), ..., (10, 'Bass')] {0.0} <music21.note.Note C> {1.0} <music21.note.Note A> {2.0} <music21.note.Note F> {3.0} <music21.note.Note C> ----- no: 7 rhythmString: ZZ bars: [(13, 'Soprano'), (14, 'Soprano'), ..., (14, 'Bass')] {0.0} <music21.note.Note C> {2.0} <music21.note.Note A> ----- no: 6 rhythmString: ZPP bars: [(6, 'Soprano'), (12, 'Soprano'), ..., (18, 'Tenor'), ... (12, 'Bass')] {0.0} <music21.note.Note C> {2.0} <music21.note.Note B-> {3.0} <music21.note.Note B-> -----

Changed in v7: bars are ordered first by number, then by part.

- music21.search.base.noteNameRhythmicSearch(thisStreamOrIterator, searchList)¶

>>> thisStream = converter.parse('tinynotation: 3/4 c4 d8 e c d e f c D E c c4 d# e') >>> searchList = [note.Note('C'), note.Note('D'), note.Note('E')] >>> for n in searchList: ... n.duration.type = 'eighth' >>> thisStreamIter = thisStream.recurse().notes

>>> search.noteNameRhythmicSearch(thisStreamIter, searchList) [3, 7]

>>> searchList[0].duration = search.WildcardDuration() >>> search.noteNameRhythmicSearch(thisStreamIter, searchList) [0, 3, 7]

- music21.search.base.noteNameSearch(thisStreamOrIterator, searchList)¶

>>> thisStream = converter.parse('tinynotation: 3/4 c4 d8 e c d e f c D E c c4 d# e') >>> searchList = [note.Note('C'), note.Note('D'), note.Note('E')] >>> thisStreamIter = thisStream.recurse().notes

>>> search.noteNameSearch(thisStreamIter, searchList) [0, 3, 7] >>> searchList2 = [note.Note('C'), search.Wildcard(), note.Note('E')] >>> search.noteNameSearch(thisStreamIter, searchList2) [0, 3, 7, 11]

- music21.search.base.rhythmicSearch(thisStreamOrIterator, searchList)¶

Takes two streams – the first is the stream to be searched and the second is a stream of elements whose rhythms must match the first. Returns a list of indices which begin a successful search.

searches are made based on quarterLength. thus a dotted sixteenth-note and a quadruplet (4:3) eighth will match each other.

Example 1: First we will set up a simple stream for searching:

>>> thisStream = converter.parse('tinynotation: 3/4 c4. d8 e4 g4. a8 f4. c4. r4') >>> thisStream.show('text') {0.0} <music21.stream.Measure 1 offset=0.0> {0.0} <music21.clef.TrebleClef> {0.0} <music21.meter.TimeSignature 3/4> {0.0} <music21.note.Note C> {1.5} <music21.note.Note D> {2.0} <music21.note.Note E> {3.0} <music21.stream.Measure 2 offset=3.0> {0.0} <music21.note.Note G> {1.5} <music21.note.Note A> {2.0} <music21.note.Note F> {6.0} <music21.stream.Measure 3 offset=6.0> {0.5} <music21.note.Note C> {2.0} <music21.note.Rest quarter> {3.0} <music21.bar.Barline type=final>

Now we will search for all dotted-quarter/eighth elements in the Stream:

>>> thisStreamIter = thisStream.recurse().notes

>>> searchStream1 = stream.Stream() >>> searchStream1.append(note.Note(quarterLength=1.5)) >>> searchStream1.append(note.Note(quarterLength=0.5))

>>> l = search.rhythmicSearch(thisStreamIter, searchStream1) >>> l [0, 3] >>> stream.Stream(thisStreamIter[3:5]).show('text') {0.0} <music21.note.Note G> {1.5} <music21.note.Note A>

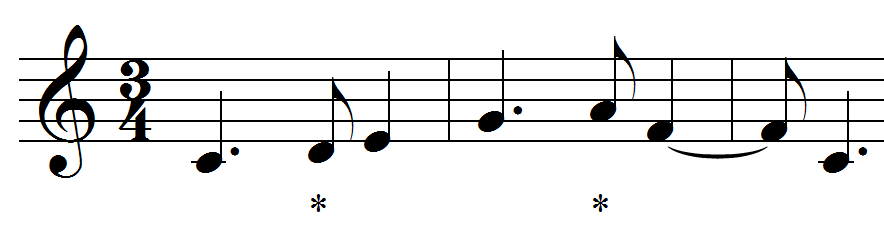

Slightly more advanced search: we will look for any instances of eighth, followed by a note (or other element) of any length, followed by a dotted quarter note. Again, we will find two instances; this time we will tag them both with a TextExpression of “*” and then show the original stream:

>>> searchStream2 = stream.Stream() >>> searchStream2.append(note.Note(quarterLength=0.5)) >>> searchStream2.append(search.Wildcard()) >>> searchStream2.append(note.Note(quarterLength=1.5)) >>> l = search.rhythmicSearch(thisStreamIter, searchStream2) >>> l [1, 4] >>> for found in l: ... thisStreamIter[found].lyric = '*' >>> thisStream.show()

Now we can test the search on a real dataset and show the types of preparation that are needed to make it most likely a success. We will look through the first movement of Corelli Trio Sonata op. 3 no. 1 (F major) looking to see how much more common the first search term (dotted-quarter, eighth) is than the second (eighth, anything, dotted-quarter). In fact, my hypothesis was wrong, and the second term is actually more common than the first! (n.b. rests are being counted here as well as notes)

>>> grave = corpus.parse('corelli/opus3no1/1grave') >>> term1results = [] >>> term2results = [] >>> for p in grave.parts: ... pf = p.flatten().stripTies().notesAndRests # consider tied notes as one long note ... temp1 = search.rhythmicSearch(pf, searchStream1) ... temp2 = search.rhythmicSearch(pf, searchStream2) ... for found in temp1: ... term1results.append(found) ... for found in temp2: ... term2results.append(found) >>> term1results [0, 7, 13, 21, 42, 57, 64, 66, 0, 5, 7, 19, 21, 40, 46, 63, 0, 8, 31, 61, 69, 71, 73, 97] >>> term2results [5, 29, 95] >>> float(len(term1results)) / len(term2results) 8.0

- music21.search.base.streamSearchBase(thisStreamOrIterator, searchList, algorithm=None)¶

A basic search function that is used by other search mechanisms, which takes in a stream or StreamIterator and a searchList or stream and an algorithm to run on each pair of elements to determine if they match.

- music21.search.base.translateDiatonicStreamToString(inputStreamOrIterator, returnMeasures=False)¶

Translates a Stream or StreamIterator of Notes and Rests only into a string, encoding only the .step (no accidental or octave) and whether the note is slower, faster, or the same speed as the previous note.

Skips all but the first note of tie. Skips multiple rests in a row

Each note gets one byte:

A-G = note of same length as previous H-N = note of longer length than previous O-U = note of shorter length than previous Z = rest

>>> s = converter.parse("tinynotation: 3/4 c4 d8~ d16 r16 FF8 F#8 a'8 b-2.") >>> streamString = search.translateDiatonicStreamToString(s.recurse().notesAndRests) >>> print(streamString) CRZFFAI >>> len(streamString) 7

If returnMeasures is True, returns an array of measureNumbers where each entry represents the measure number of the measure of the object at that character position :

>>> streamString2, measures = search.translateDiatonicStreamToString(s.recurse().notesAndRests, ... returnMeasures=True) >>> streamString == streamString2 True >>> measures [1, 1, 1, 1, 1, 2, 2]

- music21.search.base.translateDurationToBytes(n: GeneralNote)¶

takes a note.Note object and translates it to a two-byte representation

currently returns the chr() for the note’s midi number. or chr(127) for rests followed by the log of the quarter length (fitted to 1-127, see formula below)

>>> n = note.Note('C4') >>> n.duration.quarterLength = 3 # dotted half >>> trans = search.translateDurationToBytes(n) >>> trans '_' >>> (2 ** (ord(trans[0]) / 10)) / 256 # approximately 3 2.828...

- music21.search.base.translateIntervalsAndSpeed(inputStream, returnMeasures=False)¶

Translates a Stream (not StreamIterator) of Notes and Rests only into a string, encoding only the chromatic distance from the last note and whether the note is slower, faster, or the same speed as the previous note.

Skips all but the first note of tie. Skips multiple rests in a row

Each note gets one byte and encodes up from -13 to 13 (all notes > octave are 13 or -13)

>>> s = converter.parse("tinynotation: 3/4 c4 d8~ d16 r16 F8 F#8 F8 a'8 b-2") >>> sn = s.flatten().notesAndRests.stream() >>> streamString = search.translateIntervalsAndSpeed(sn) >>> print(streamString) Ib RHJ<9 >>> print([ord(x) for x in streamString]) [73, 98, 32, 82, 72, 74, 60, 57] >>> len(streamString) 8

If returnMeasures is True, returns a triplet of whether the last note was a rest, whether the last note was tied, what the last quarterLength was, and what the last pitches’ midi number was

which can be fed back into this algorithm:

>>> streamString2, measures = search.translateIntervalsAndSpeed(sn, returnMeasures=True) >>> streamString == streamString2 True >>> measures [1, 1, 1, 1, 1, 2, 2, 2]

- music21.search.base.translateNoteTieToByte(n: GeneralNote)¶

takes a note.Note object and returns a one-byte representation of its tie status. ‘s’ if start tie, ‘e’ if stop tie, ‘c’ if continue tie, and ‘’ if no tie

>>> n = note.Note('E') >>> search.translateNoteTieToByte(n) ''

>>> n.tie = tie.Tie('start') >>> search.translateNoteTieToByte(n) 's'

>>> n.tie.type = 'continue' >>> search.translateNoteTieToByte(n) 'c'

>>> n.tie.type = 'stop' >>> search.translateNoteTieToByte(n) 'e'

- music21.search.base.translateNoteToByte(n: GeneralNote) str¶

takes a note.Note object and translates it to a single byte representation in a string.

currently returns the chr() for the note’s midi number. or chr(127) for rests and unpitched.

>>> n = note.Note('C4') >>> b = search.translateNoteToByte(n) >>> b '<' >>> ord(b) 60 >>> ord(b) == n.pitch.midi True

Chords are currently just searched on the first Note (or treated as a Rest if None)

- music21.search.base.translateNoteWithDurationToBytes(n: GeneralNote, includeTieByte: bool = True) str¶

takes a note.Note object and translates it to a three-byte representation as a string.

currently returns the chr() for the note’s midi number. or chr(127) for rests followed by the log of the quarter length (fitted to 1-127, see

translateDurationToBytes()) followed by ‘s’, ‘c’, or ‘e’ if includeTieByte is True and there is a tie.>>> n = note.Note('C4') >>> n.duration.quarterLength = 3 # dotted half >>> trans = search.translateNoteWithDurationToBytes(n) >>> trans '<_' >>> (2**(ord(trans[1])/10.0))/256 # approximately 3 2.828...

>>> n.tie = tie.Tie('stop') >>> trans = search.translateNoteWithDurationToBytes(n) >>> trans '<_e'

>>> trans = search.translateNoteWithDurationToBytes(n, includeTieByte=False) >>> trans '<_'

- music21.search.base.translateStreamToString(inputStreamOrIterator, returnMeasures=False)¶

takes a stream (or streamIterator) of notesAndRests only and returns a string for searching on.

>>> s = converter.parse("tinynotation: 3/4 c4 d8 r16 FF8. a'8 b-2.") >>> sn = s.flatten().notesAndRests >>> streamString = search.translateStreamToString(sn) >>> print(streamString) <P>F<)KQFF_ >>> len(streamString) 12

Chords give the pitch only of the first note and Unpitched objects are treated as rests:

>>> s = stream.Stream([note.Note('C4'), note.Rest(), ... chord.Chord(['C4', 'E4']), note.Unpitched()]) >>> streamString = search.translateStreamToString(s) >>> list(streamString.encode('utf-8')) [60, 80, 127, 80, 60, 80, 127, 80]

- music21.search.base.translateStreamToStringNoRhythm(inputStream, returnMeasures=False)¶

takes a stream or streamIterator of notesAndRests only and returns a string for searching on, using translateNoteToByte.

>>> s = converter.parse("tinynotation: 4/4 c4 d e FF a'2 b-2") >>> sn = s.flatten().notesAndRests >>> search.translateStreamToStringNoRhythm(sn) '<>@)QF'

With returnMeasures, will return a tuple of bytes and a list of measure numbers:

>>> search.translateStreamToStringNoRhythm(sn, returnMeasures=True) ('<>@)QF', [1, 1, 1, 1, 2, 2])

- music21.search.base.translateStreamToStringOnlyRhythm(inputStream, returnMeasures=False)¶

takes a stream or streamIterator of notesAndRests only and returns a string for searching on.

>>> s = converter.parse("tinynotation: 3/4 c4 d8 e16 FF8. a'8 b-2.") >>> sn = s.flatten().notesAndRests >>> streamString = search.translateStreamToStringOnlyRhythm(sn) >>> print(streamString) PF<KF_ >>> len(streamString) 6